👋 Hi, I am Zhangding Liu

I am a Ph.D. student in Computational Science and Engineering at the Georgia Institute of Technology, advised by Prof. John E. Taylor. My research focuses on machine learning, multimodal learning, and large language models, with the goal of advancing AI's ability to understand, reason about, and interact with complex real-world environments.

I have collaborated with leading researchers and industry partners, including Lawrence Berkeley National Laboratory, Partnership for Innovation, and emergency services departments, applying AI to multimodal data analysis and disaster-aware resilience in smart-city digital twins.

With expertise in machine learning, deep learning, and LLMs, as well as a strong background in engineering applications, I am passionate about leveraging AI to address real-world challenges.

I welcome research collaborations and academic discussions in related areas.

🚀 RESEARCH EXPERIENCE

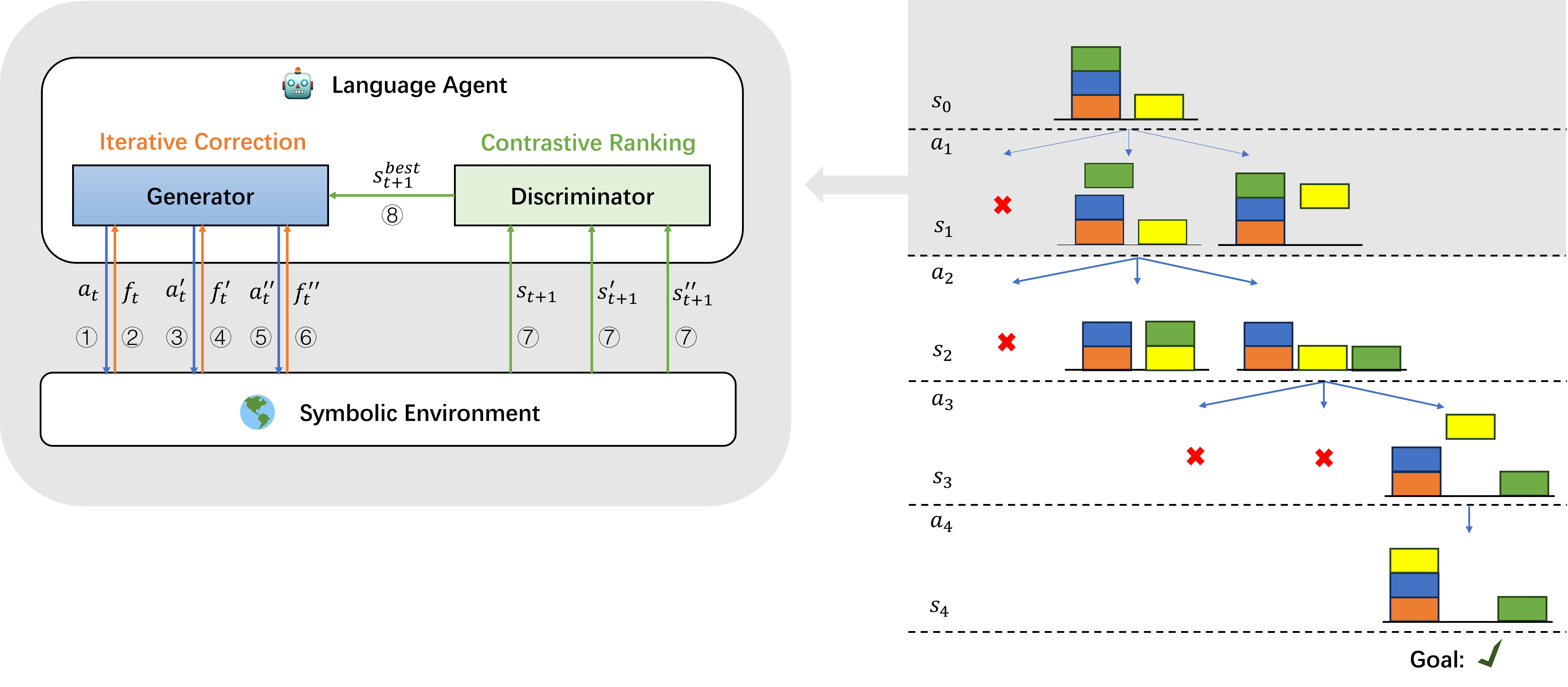

SymPlanner: Deliberate Planning with Symbolic Representations

2025 | Machine Learning, Planning, LLM

- Developed SymPlanner, a framework augmenting LLMs with symbolic world models for multi-step planning. Introduced iterative correction and contrastive ranking to enhance reasoning reliability.

- Built a full pipeline with policy model, symbolic simulator, and discriminator, achieving up to 54% accuracy on PlanBench long-horizon tasks. Outperformed CoT, ToT, and RAP baselines by 2–3×.

[Paper]

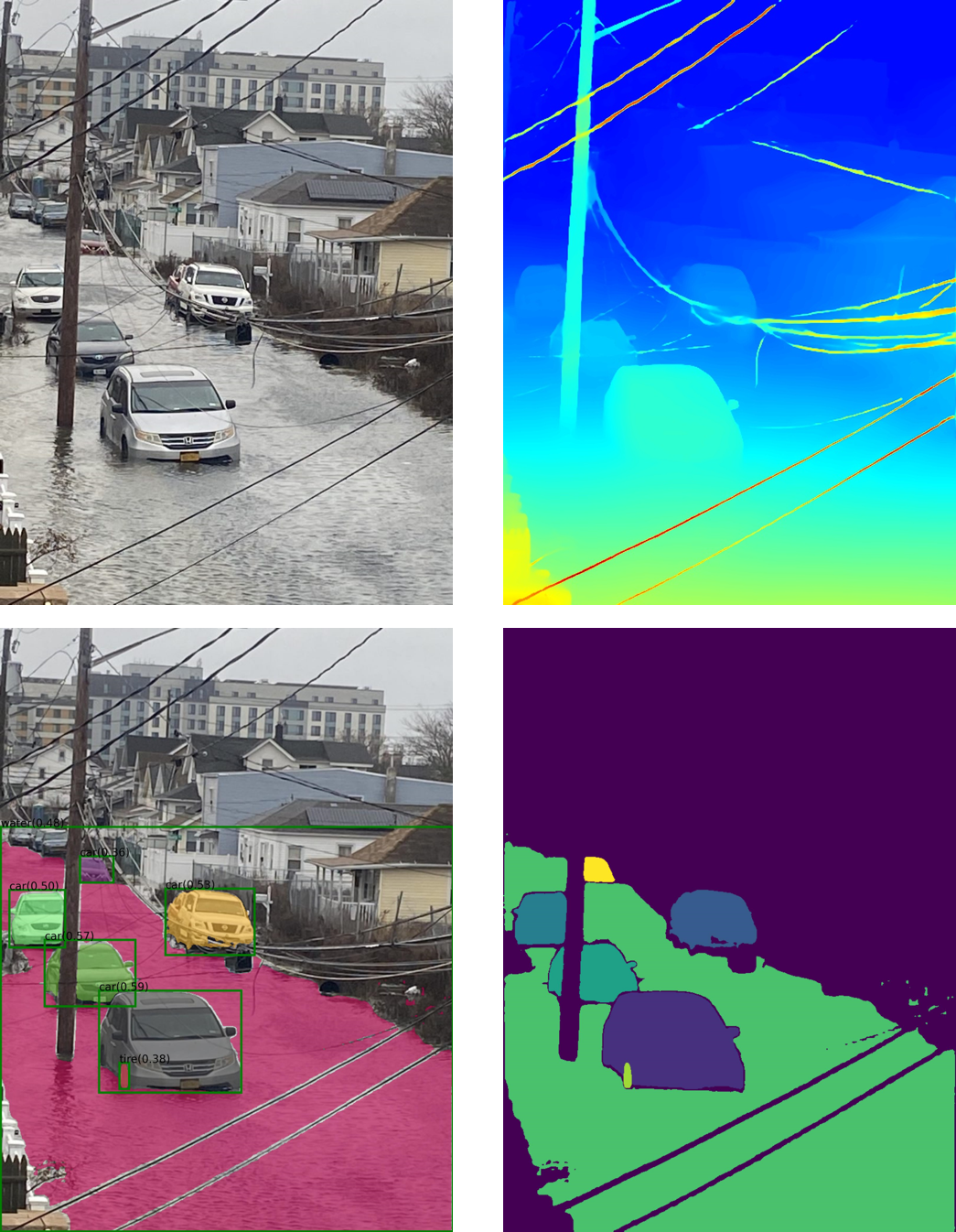

FloodVision: Urban Flood Depth Estimation Using Foundation Vision-Language Models and Domain Knowledge Graph

Research Assistant Intern · Partnership for Innovation

2025 | Computer Vision, Foundation Models, Smart Cities

- Designed FloodVision, a retrieval-augmented multimodal framework combining GPT-4o with a curated flood knowledge base for image-based flood depth estimation.

- Architected an AI-enabled Digital Twin for Coastal Flood Resilience, integrating road-closure reports, roadside sensors, and camera feeds to support real-time, street-level flood monitoring and prediction for emergency response.

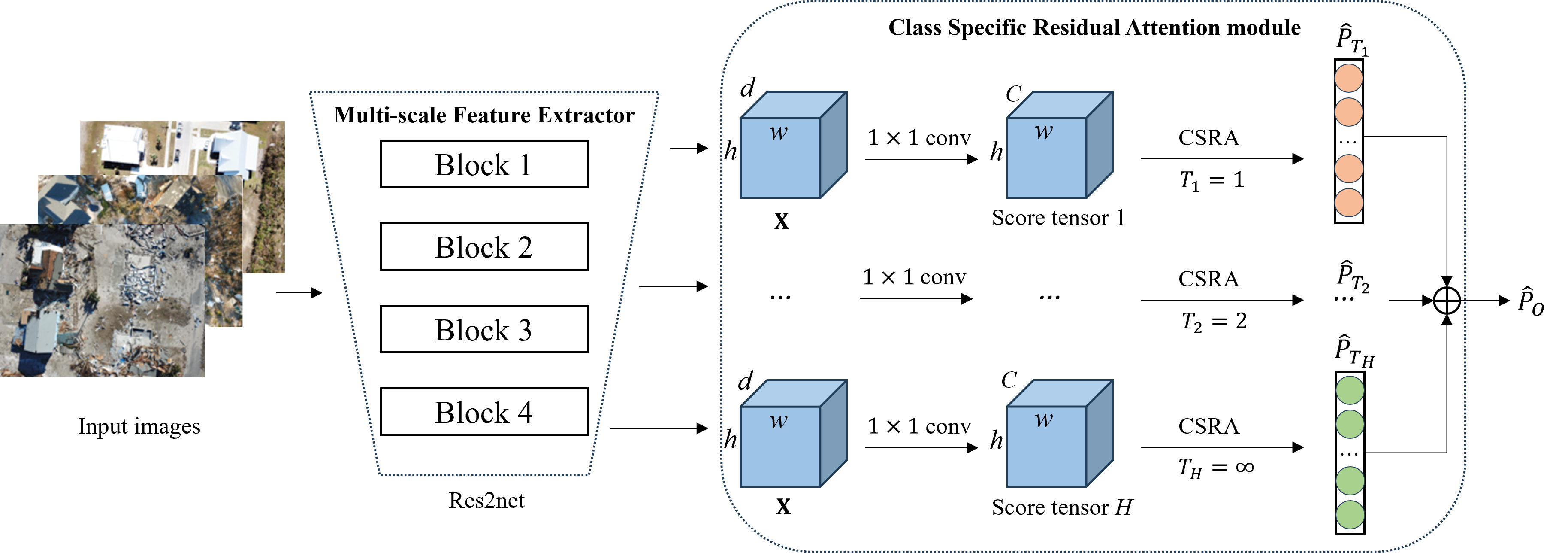

MCANet: Multi-Label Damage Classification for Rapid Post-Hurricane Damage Assessment with UAV Images

2025 | Computer Vision, Disaster Response, Deep Learning

- Proposed MCANet, a Res2Net-based multi-scale framework with class-specific residual attention for post-hurricane damage classification.

- Achieved 92.35% mAP on the RescueNet UAV dataset, outperforming ResNet, ViT, EfficientNet, and other baselines.

- Enables fast, interpretable multi-label assessment of co-occurring damage types to support emergency response and digital twin systems.

[Paper]

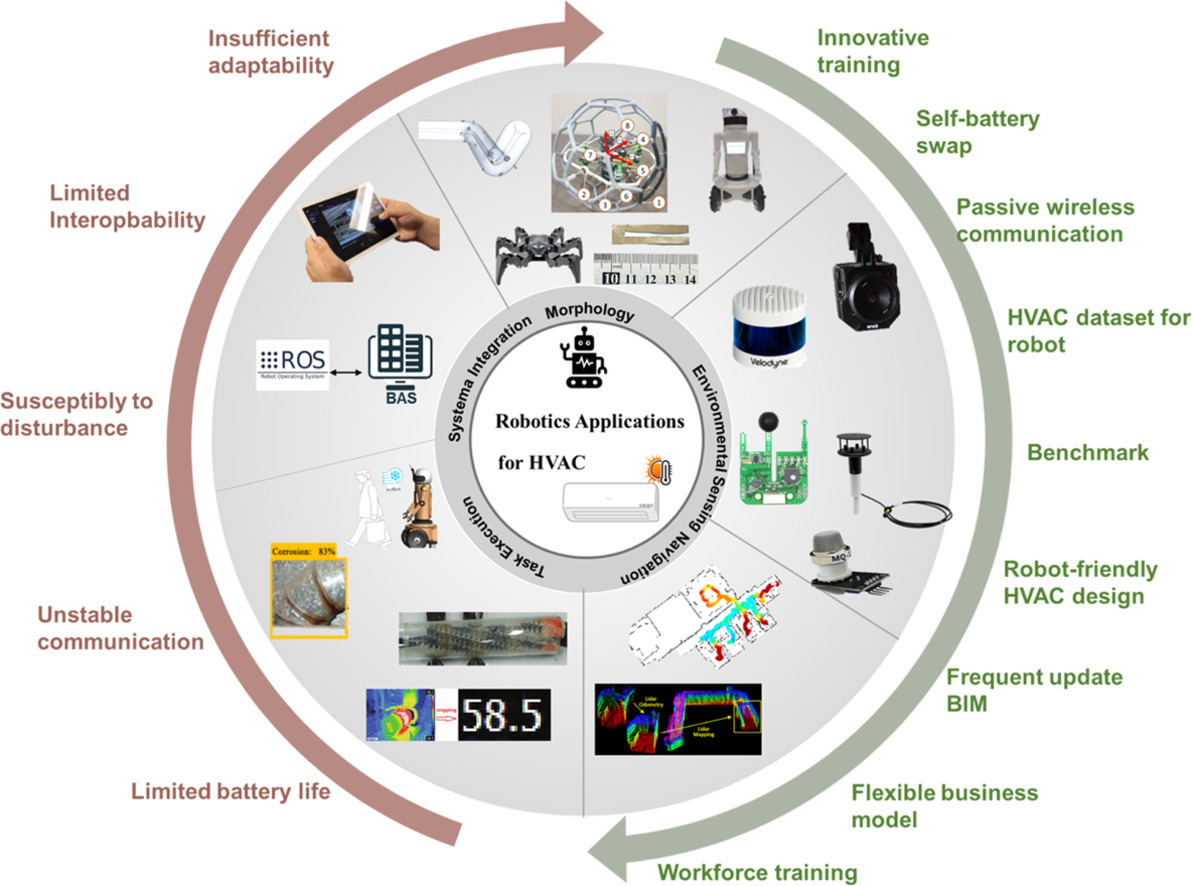

Heat Resilience Mapping and Robotics in HVAC

Research Assistant Intern · Lawrence Berkeley National Laboratory

2024 | Urban Heat Resilience, Robotics, HVAC Systems

- Developed a Heat Vulnerability Index (HVI) map for Oakland, integrating data on weather, demographics, health, and green spaces.

- Designed a web-based app on the CityBES platform to visualize HVI data, enabling better urban heat resilience planning.

- Explored robotics applications in HVAC systems to enhance quality, safety, and efficiency in installation and maintenance processes.

[Paper]

AI for Epidemiological Modeling (AI.Humanity)

2024 | Machine Learning, Neural Networks, SIR Parameter Calibration

- Proposed a machine learning–guided framework to calibrate disease transmission parameters by integrating urban infrastructure density and human mobility constraints.

- Reduced early-stage COVID-19 case prediction error (RMSE) by 46%, demonstrating the model’s robustness under sparse and noisy data conditions.

[Paper]

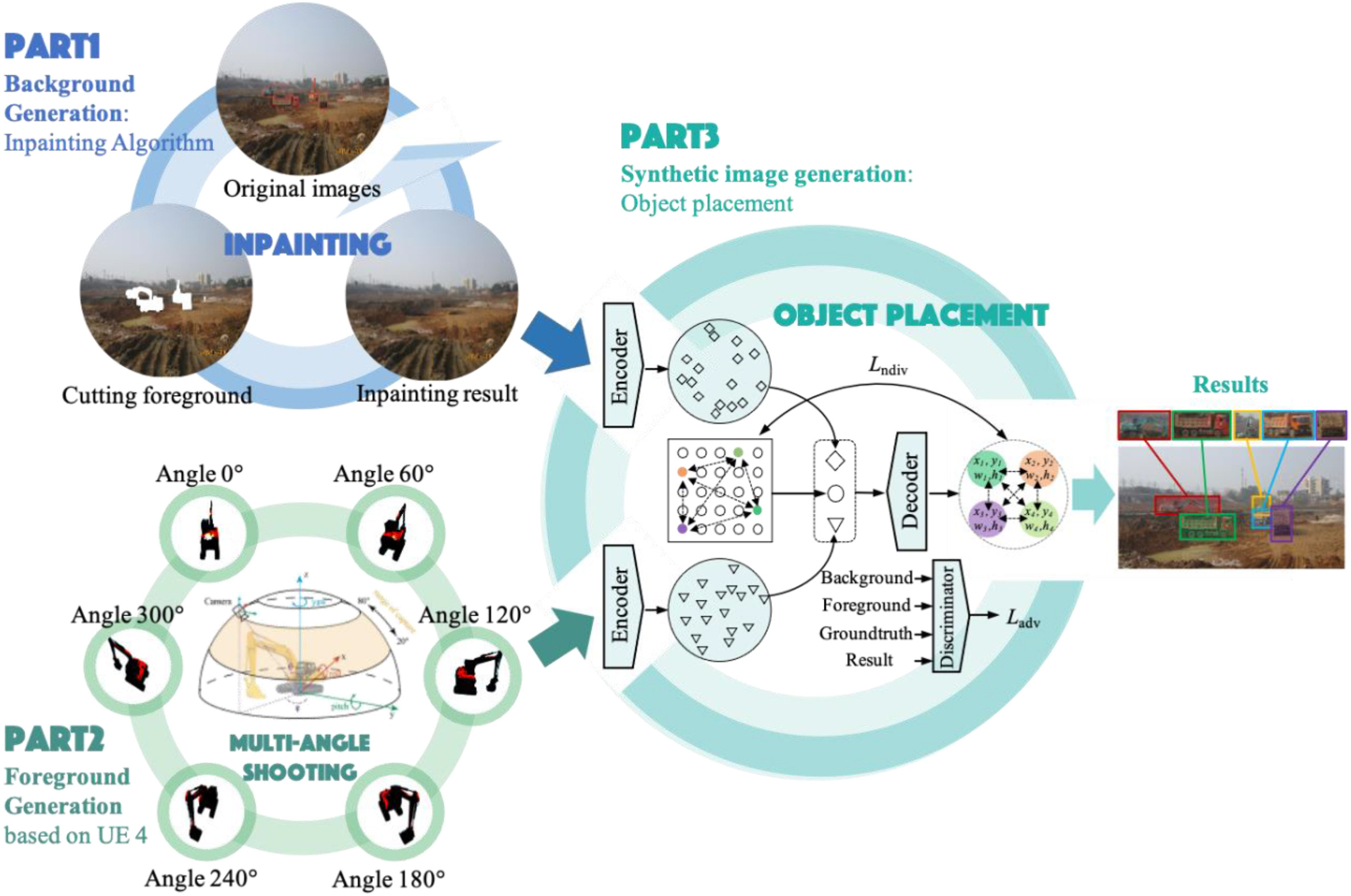

Synthetic Data for Smart Construction

2024 | Computer Vision, Synthetic Data, Construction AI

UE4 + Transformer for Augmented Datasets

- Developed a context-aware synthetic image generation pipeline for construction machinery detection, integrating Swin Transformer into the PlaceNet framework to improve geometric consistency in object placement.

- Created the S-MOCS synthetic dataset with multi-angle foregrounds and context-aware object placement, which improved detection of small and unusually oriented machinery and outperformed real-world datasets by 2.1% mAP in object detection tasks.

[Paper]

📝 PUBLICATIONS

For a complete list of my publications, please visit my Google Scholar profile:

- Google Scholar: Google Scholar Profile

🎓 EDUCATION

🏆 HONORS AND AWARDS

- Gilbert F. “Gil” Amelio Engineering Fellowship - Georgia Institute of Technology

- First-Class Scholarship - Tongji University

📬 CONTACT

- Email: zliu952 AT gatech DOT edu (replace “AT” with “@” and “DOT” with “.”)

- LinkedIn: linkedin.com/in/zhangding